I spent six months rebuilding the same content workflows over and over.

Hebrew SEO articles? Two full days per piece. Buying guides for e-commerce clients? Start from scratch every single time. Every new project meant re-explaining context, re-writing prompts, re-fixing the same quality issues I'd already solved last week.

The cost: 60% of my time on repetitive work I'd already figured out. The frustration: knowing the problem was solved but having to solve it again anyway.

Then I stopped asking "how do I write faster with AI" and started asking the right question: "how do I build a system that remembers?"

That shift changed everything.

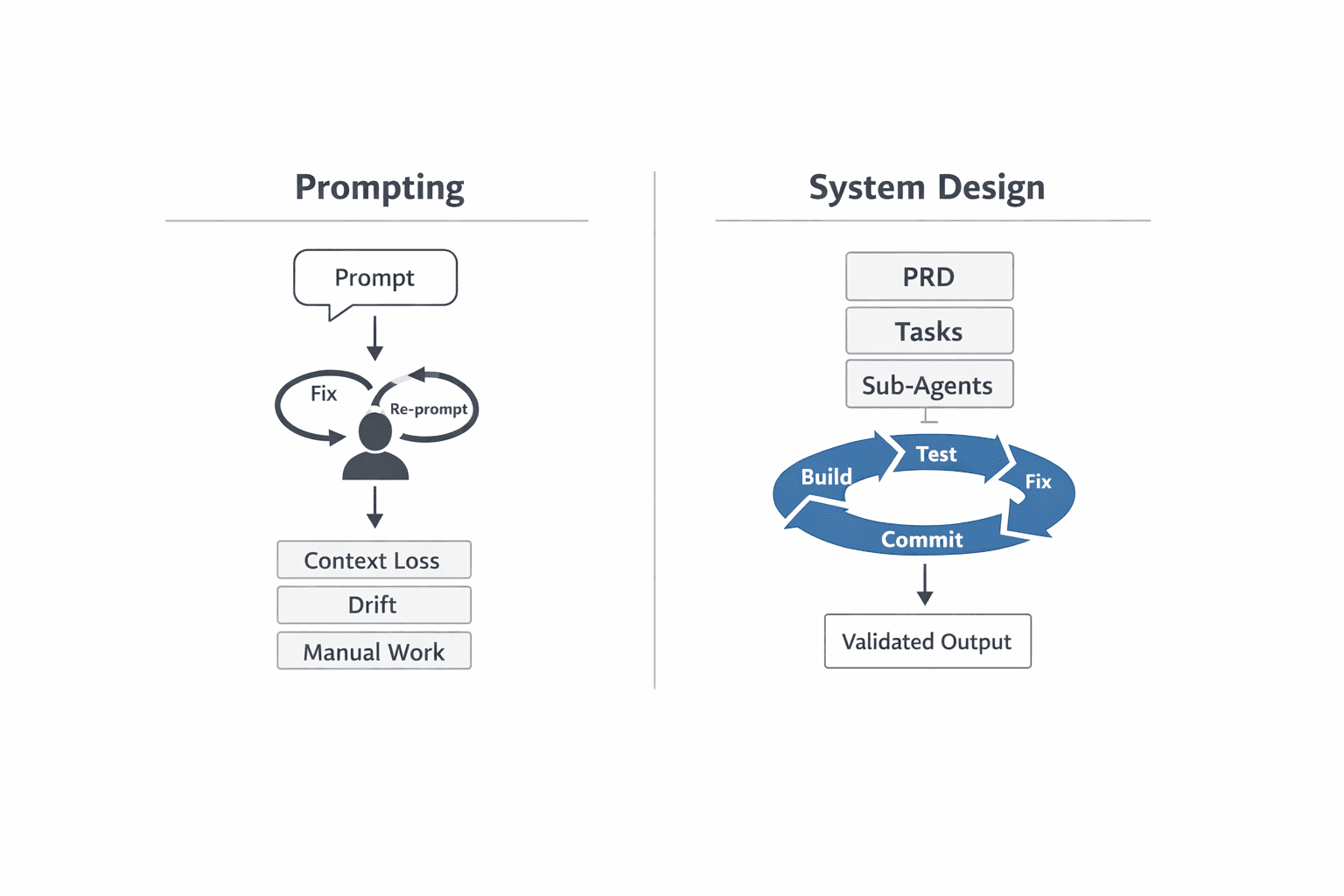

The Problem: You're Managing AI Prompt-by-Prompt

Here's what I was doing wrong (and what most people are still doing):

Running one mega-prompt that tries to handle research, strategy, copywriting, and SEO optimization all at once. Then when the output is mediocre, running it again with "more specific instructions." Repeat until it's good enough or you run out of patience.

This isn't a workflow. It's gambling with extra steps.

The symptoms:

- Inconsistent quality (good one day, garbage the next)

- No idea why something worked or didn't

- Can't answer "what would we change next time?"

- Every new content type = start over

- Zero learning from previous wins

I was treating AI like a better search engine when I should have been building infrastructure.

The Insight: Orchestration ≠ Letting AI Decide

The breakthrough wasn't a better prompt. It was understanding what orchestration actually means.

Orchestration isn't letting the agent make decisions. It's YOU calling the right sub-agent, with the right tools, with the right context, in the right flow.

Think about it: you wouldn't ask one person to handle market research, strategic positioning, copywriting, and SEO optimization. You'd have specialists. Same principle applies to AI agents.

The key insight: Control the flow, not the output.
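
To make "control the flow" concrete, here is a minimal sketch in Python. It assumes each sub-agent can be wrapped as a plain function; the function bodies below are stubs standing in for real model calls, and the names (`FLOW`, `run_flow`) are illustrative rather than taken from any framework.

```python
from typing import Callable, Dict, List, Tuple

# A sub-agent is a plain function: it reads the shared context and returns
# its output. These bodies are stubs; a real version would call a model.
Agent = Callable[[Dict[str, str]], str]


def research(ctx: Dict[str, str]) -> str:
    return f"research notes for '{ctx['topic']}'"


def strategy(ctx: Dict[str, str]) -> str:
    return f"angle based on: {ctx['research']}"


def copywriter(ctx: Dict[str, str]) -> str:
    return f"draft following: {ctx['strategy']}"


# The order is fixed here, in code, by you. No model picks the next step.
FLOW: List[Tuple[str, Agent]] = [
    ("research", research),
    ("strategy", strategy),
    ("copywriter", copywriter),
]


def run_flow(topic: str) -> Dict[str, str]:
    ctx = {"topic": topic}
    for name, agent in FLOW:
        # Each output is stored under the step's name so later steps can use it.
        ctx[name] = agent(ctx)
    return ctx


result = run_flow("standing desks")
print(result["copywriter"])
```

The design choice is that the sequence lives in `FLOW`, not in a prompt, so changing the order or swapping one agent is a one-line edit you can reason about.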

The Solution: Design the Workflow First

Here's how I actually think about it:

Step 1: Break down the workflow into sub-agents

Don't start with code. Start with roles. For my Hebrew SEO content:

- Research agent - analyzes search intent, competitor content, market language

- Strategy agent - positions the angle, defines constraints: YMYL ("Your Money or Your Life") compliance, tone, proof points

- Copywriter agent - drafts based on strategy and research

- SEO agent - optimizes structure, keywords, meta

- QA agent - validates output against requirements

Each agent has ONE job. That's it.

Step 2: Define what each agent needs

This is where most people mess up. Each sub-agent needs:

- Specific prompt (their job, their constraints, their output format)

- Tools/skills (research agent needs web search, SEO agent needs keyword analysis)

- Context from previous step (strategy agent gets research output, copywriter gets strategy + research)

Example: My SEO agent gets web search access and the draft. Its prompt: "Optimize this for Hebrew search intent: [target keyword]. Check structure, add internal links, verify meta tags. Output: optimized markdown."
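
One way to write that down is a small spec per agent. The sketch below is an assumption about how you might structure it: `AgentSpec`, `build_prompt`, and the field names are hypothetical, the tool list is just labels (nothing is actually called), and the keyword and draft text are placeholder values.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class AgentSpec:
    name: str
    prompt_template: str                             # job, constraints, output format
    tools: List[str] = field(default_factory=list)   # labels only in this sketch
    needs: List[str] = field(default_factory=list)   # outputs required from earlier steps


seo_agent = AgentSpec(
    name="seo",
    prompt_template=(
        "Optimize this for Hebrew search intent: {target_keyword}. "
        "Check structure, add internal links, verify meta tags. "
        "Output: optimized markdown.\n\nDraft:\n{draft}"
    ),
    tools=["web_search"],
    needs=["draft"],
)


def build_prompt(spec: AgentSpec, context: Dict[str, str]) -> str:
    # Fail loudly if a previous step did not hand over what this agent needs.
    missing = [key for key in spec.needs if key not in context]
    if missing:
        raise KeyError(f"{spec.name} is missing context: {missing}")
    return spec.prompt_template.format(**context)


# Placeholder values, for illustration only.
prompt = build_prompt(
    seo_agent,
    {"target_keyword": "ergonomic chair", "draft": "# Draft text"},
)
print(prompt)
```

Keeping `needs` explicit means a broken handoff shows up as an error before any model call is made.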

Step 3: Validate the workflow makes sense

Before building anything, test the logic:

- Does each step have what it needs from the previous step?

- Are handoffs clear?

- Can I explain why this order matters?

If the order doesn't hold up, fix the flow first. Research → copywriter → strategy, for example, makes no sense: the copywriter would be drafting before the strategy exists.
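
This check can be done on paper, but it is also easy to sketch in code. The example below assumes each step produces one output named after itself and that the run starts from a hypothetical `brief`; `check_flow` is an illustrative helper, not part of any library.

```python
from typing import List, Tuple

# (step name, inputs it needs). Each step is assumed to produce one output
# named after itself; "brief" is a hypothetical starting input.
Step = Tuple[str, List[str]]


def check_flow(steps: List[Step], initial: List[str]) -> List[str]:
    """Return handoff problems; an empty list means the order works."""
    available = set(initial)
    problems = []
    for name, needs in steps:
        for item in needs:
            if item not in available:
                problems.append(f"{name} needs '{item}' before it exists")
        available.add(name)
    return problems


good = [
    ("research", ["brief"]),
    ("strategy", ["research"]),
    ("copywriter", ["strategy", "research"]),
]
bad = [
    ("research", ["brief"]),
    ("copywriter", ["strategy", "research"]),
    ("strategy", ["research"]),
]

print(check_flow(good, ["brief"]))
print(check_flow(bad, ["brief"]))
```

The second flow is flagged because the copywriter asks for the strategy output one step too early.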

Step 4: Add validation where quality breaks

You don't need validation everywhere. Add it where things go wrong.

For me: my Hebrew health content runs into YMYL medical compliance issues. So I added a validator that uses an LLM as the judge:

"Review this health content. Flag: medical claims without sources, advice without disclaimers, promotional language in YMYL context. Output: pass/fail + specific issues."

That's it. One prompt. In my runs it catches about 90% of compliance issues.
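
A rough sketch of wiring that judge into a flow is below. Several things are assumptions: the extra instruction asking for PASS or FAIL on the first line is my addition so the reply can be parsed, `call_model` is a hypothetical stand-in for whatever client you use, and `fake_judge` returns a canned reply so the example runs without any API.

```python
from dataclasses import dataclass
from typing import Callable, List

JUDGE_PROMPT = (
    "Review this health content. Flag: medical claims without sources, "
    "advice without disclaimers, promotional language in YMYL context. "
    "Output: pass/fail + specific issues.\n\n"
    # Assumed formatting rule, added so the reply is machine-readable.
    "Answer with PASS or FAIL on the first line, then one issue per line.\n\n"
    "Content:\n{content}"
)


@dataclass
class Verdict:
    passed: bool
    issues: List[str]


def parse_verdict(reply: str) -> Verdict:
    lines = [line.strip() for line in reply.strip().splitlines() if line.strip()]
    if not lines:
        return Verdict(False, ["empty judge reply"])
    # Anything that is not an explicit PASS counts as a failure.
    passed = lines[0].upper().startswith("PASS")
    issues = [line.lstrip("-* ").strip() for line in lines[1:]]
    return Verdict(passed, issues)


def validate(content: str, call_model: Callable[[str], str]) -> Verdict:
    return parse_verdict(call_model(JUDGE_PROMPT.format(content=content)))


def fake_judge(prompt: str) -> str:
    # Canned reply for illustration; a real judge would be a model call.
    return "FAIL\n- claim about dosage has no source\n- no disclaimer"


verdict = validate("Take 500mg daily to cure headaches.", fake_judge)
print(verdict.passed, verdict.issues)
```

Treating an unparseable reply as a failure is deliberate: for compliance checks it is safer to re-review a good draft than to let a bad one through.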

Step 5: Run it, debug, store learnings

Run the workflow. See where it breaks. Fix the prompt or the handoff.

Store what worked:

- Which research angles converted

- Which constraints improved quality

- Which validation caught real issues

That becomes your memory layer. Next run starts smarter.
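
The memory layer does not need to be sophisticated. As a minimal sketch, assuming a JSON Lines file is enough, the helpers below append one learning per line and load them back as context for the next run. The file location, the `kind` labels, and the example notes are all made up for illustration.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, List


def store_learning(path: Path, kind: str, note: str) -> None:
    # Append one JSON object per line so earlier learnings are never overwritten.
    with path.open("a", encoding="utf-8") as f:
        f.write(json.dumps({"kind": kind, "note": note}, ensure_ascii=False) + "\n")


def load_learnings(path: Path) -> List[Dict[str, str]]:
    if not path.exists():
        return []
    with path.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


def as_prompt_context(learnings: List[Dict[str, str]]) -> str:
    # Render learnings as a bullet list that can be prepended to a prompt.
    return "\n".join(f"- [{item['kind']}] {item['note']}" for item in learnings)


# A temporary directory keeps the example self-contained.
memory_file = Path(tempfile.mkdtemp()) / "learnings.jsonl"

# Placeholder notes, not real results.
store_learning(memory_file, "constraint", "require a source for every health claim")
store_learning(memory_file, "validation", "disclaimer check caught a real issue")

loaded = load_learnings(memory_file)
print(as_prompt_context(loaded))
```

On the next run, the output of `as_prompt_context` can be handed to the strategy or QA agent as part of its context.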

Why This Works

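Deterministic - Same input → same flow, every time. The model's wording can still vary between runs, but the steps, context, and checks don't, so quality stays consistent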

Debuggable - When something breaks, I know which sub-agent failed and why

Cheap - The orchestration is plain local logic, and when one step fails I rerun that step instead of paying for a full rewrite

Scalable - New content type = new set of sub-agents with the same pattern

The outcome: 2 days → 4 hours per piece. Quality stayed consistent. The system scaled without rewriting prompts.

Your First Step

Pick one workflow you do repeatedly. Content creation, data analysis, research reports - doesn't matter.

Map it out:

- What are the distinct roles? (researcher, analyst, writer, etc.)

- What does each role need? (tools, context, constraints)

- What's the handoff between them?

- Where does quality usually break?

Build the sub-agents: Write the prompt for each role. Test it. Make sure the output format matches what the next agent needs.

Add validation where it matters: Use LLM-as-judge for quality checks. "Does this meet [requirement]? Yes/No + why."

Run it: Execute the flow. Debug where handoffs fail. Store what worked.

That's orchestration.

The Shift

AI didn't eliminate the work. It moved it upstream to workflow design.

The builders who win won't be the ones with the best prompts. They'll be the ones who built systems that remember, validate, and scale.

Orchestration isn't AI magic. It's thinking like an engineer about content production.

And that thinking is how you turn 2 days into 4 hours.